AI-Generated Art: Look What They Made; They Made It for Me – Happy Technology! – BeNjamyn Upshaw-Ruffner

DECEMBER 10, 2021

A friend of mine once exclaimed, “This is the best art I’ve ever seen!” What do you think they were talking about? If you guessed artwork generated by AI, you’d be right. Unfortunately, I can’t share those specific works, but they were amazingly beautiful: far more elaborate and fascinating than any computer-created art I had encountered before. Have computers gotten better at making art over the years?

Well, first I wanted to find out exactly how it works. I don’t have any programming skills, but after taking a course about ethics and epistemology in the digital age, I do understand the basics of how neural networks work. My understanding is that websites like Artbreeder and NightCafe use neural networks to generate their art. My personal favorite method has been an open-source program called VQGAN+CLIP, which generates images from typed text prompts or titles. If this is starting to sound complicated, let’s take a step back.

To put it simply, a neural network is a system of many nodes that talk to each other. There can be hundreds, thousands, or even millions of these nodes. The nodes look at all the “training data”: the material we give our network access to. Each node is connected to other nodes, and each connection has its own strength. Think of this as a network of friends and acquaintances; the stronger the relationship, the more weight that connection carries. Eventually, the network passes information through all these connected nodes to produce an output, with the connection strengths shaped by the training data. In our case, that output is the image we get, and the training data are large collections of images gathered from the internet (I’m not sure exactly which datasets are used, though). In the end, what we get is something beautifully absurd, disgustingly haunting, or somewhere in between.
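To make the “network of friends” idea a little more concrete, here is a minimal sketch in Python of how information flows through weighted connections. It assumes a tiny, made-up network (three input nodes, two hidden nodes, one output node) with invented weights; the names `layer` and `sigmoid` are just illustrative. In a real image generator there are vastly more of these weights, and they are learned from the training data rather than typed in by hand.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """Compute one layer: each node sums its weighted inputs, adds a bias, and applies sigmoid."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        # A bigger weight means a "stronger friendship" between two nodes.
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


# Hypothetical toy network: 3 inputs -> 2 hidden nodes -> 1 output node.
# These weights are invented for illustration; real networks learn them from training data.
hidden_weights = [[0.5, -1.0, 0.8], [1.2, 0.3, -0.4]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.5, -2.0]]
output_biases = [0.2]

inputs = [1.0, 0.0, 0.5]
hidden = layer(inputs, hidden_weights, hidden_biases)
output = layer(hidden, output_weights, output_biases)
print(f"hidden nodes: {hidden}")
print(f"output node: {output}")
```

Each node simply adds up its inputs, scaled by how strong each connection is, then squashes the total into a number between 0 and 1. Training, in this picture, is the process of nudging those weights until the outputs become useful.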

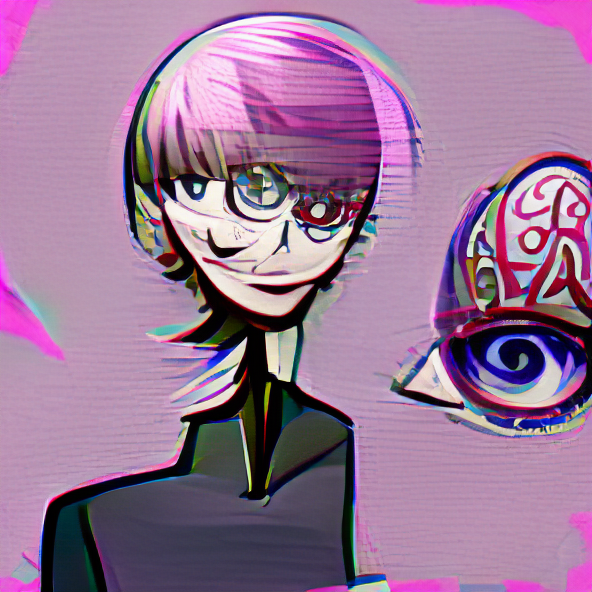

Look at these examples of strange discomfort:

And finally, an image I have retroactively titled “Skin”:

I used Artbreeder to create these. Artbreeder seems to work by combining images to generate something new. These are the kinds of AI images that make me deeply uncomfortable, though.

I think that art doesn’t necessarily need a designed purpose, but the creator usually has some intention in mind; design is usually deliberate. In the case of these images, everything seems incidental. Can we really ascribe intentionality and deliberation to a digital machine? If so, how might that differ from the way our brains work? In what ways do our use of and reliance on digital technologies affect how we think about and interact with others, and with the world? I want to let these questions hang over us as we think about these AI-generated pictures.

My discomfort with these pictures is due to several factors. First, the resolution is very low, so the “subjects” of these pictures lack detail and are hard to make out. Second, they tend to use forms and textures that we’re familiar with: sand, trees, cloth, metal, cushions, wood, skin, etc. When I look at these images, I recognize them as both familiar and completely alien; it’s a contradiction!

Adding to my frustration is how these images mirror the perspective we would have if we were looking at photos. In number 7, for example, there is a depth-of-field effect: part of the jumbled subject is in the foreground, while the rest recedes into the background. We can also see that the subject is lit from the right, casting a sort of shadow on the left. It looks like something physically occupying space, yet we can’t make sense of it.

The aspects we can make some sense of, like what might be part of a hand or perhaps a baby’s head, make me exceptionally uncomfortable. I think this is because the subject matter seems “cut off” from its place. It feels like dismemberment, even though the subject is “together,” so to speak. I think picture number 7 is especially horrifying because the distortion happens to the human body, something so fundamental to our existence.

What sends it over the edge for me are the eyes. This is true of the other Artbreeder images as well; the grayish figure in number 5, for instance, is also uncomfortable to look at. I think I come to understand a creature largely through its eyes. There’s this psychic force that calls upon me to look back. When I make eye contact with my dog, a cat, a horse, etc., I think, “Wow, there’s another soul behind those eyes.” With people, whether friends or family, our eyes meet before any interaction even begins, and there is an unspoken understanding of presence. But these images fly in the face of that by placing eyes on utterly indiscernible subjects. The nonsense of it all makes the subjects of these images frightening to me on some deep, ontological level. It’s as if a form of life has been distorted to the point of being unrecognizable. I can only call it art because it has such a visceral effect on me. But what if the distortions were more beautiful than they were disturbing?

Many people online have written about how AI-generated art seems to occupy a middle ground between abstract and representational art. For me, nowhere is this better exemplified than in the images I was able to generate from text prompts using VQGAN+CLIP.

Here are my results:

And finally:

I wanted to get very creative, diverse, and elaborate with the final prompt. I think it illustrates how much we can put into a prompt and still arrive at something interesting. There’s an almost painterly quality to these images. Using VQGAN+CLIP, I was able to come up with images that are visually interesting without being as creepy as Artbreeder’s tend to be. The trade-off is that VQGAN+CLIP takes several minutes to produce an image, depending on how many iterations you want the program to run. I found 300 to be a good number.

What seems to be happening is that the network starts with random noise, like static. Then, over many iterations, the program makes small changes to the image, each time nudging it to better match the prompt you gave, as judged by CLIP. This can produce some very interesting visuals, and the prompt lets you choose their subject matter. What will the image look like if you stick to abstract concepts like death, truth, or justice? What if you stick to something very concrete and specific, like an animal or an object?
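The loop described above can be sketched in a few lines of Python. This is a toy illustration under several assumptions, not how VQGAN+CLIP is actually implemented: the “image” is just a short list of numbers, `prompt_target` is a made-up list standing in for the prompt, and the hypothetical `similarity` function stands in for CLIP’s judgment of how well an image matches the text. As commonly implemented, VQGAN+CLIP adjusts VQGAN’s internal representation of the image using gradients; this sketch swaps that for a simpler technique, random hill climbing, where each small random change is kept only if it improves the score.

```python
import math
import random


def similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the two lists 'point' in exactly the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def generate(target: list[float], iterations: int = 300, step: float = 0.1,
             seed: int = 0) -> tuple[list[float], float]:
    """Start from random noise and keep any small change that matches the target better."""
    rng = random.Random(seed)
    # Step 1: start from random noise, like static on a TV screen.
    image = [rng.uniform(-1.0, 1.0) for _ in target]
    score = similarity(image, target)
    for _ in range(iterations):
        # Step 2: propose a small random tweak to every "pixel".
        candidate = [p + rng.gauss(0.0, step) for p in image]
        candidate_score = similarity(candidate, target)
        # Step 3: keep the tweak only if it matches the "prompt" better.
        if candidate_score > score:
            image, score = candidate, candidate_score
    return image, score


# Hypothetical stand-in for an encoded text prompt (made-up numbers).
prompt_target = [0.2, -0.5, 0.9, 0.1, -0.3, 0.7, 0.0, 0.4]
image, final_score = generate(prompt_target, iterations=300)
print(f"similarity after 300 iterations: {final_score:.3f}")
```

The `iterations` parameter plays the role of the iteration count discussed earlier (300 here, as in the runs described above). Because a change is only kept when it raises the score, the match can never get worse from one iteration to the next; more iterations simply give it more chances to improve, which is also why longer runs take more time.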

I find that we often ask these questions of ourselves when we make art. When I write, for example, I’m always thinking about how I can evoke certain emotions and how I can weave words and sounds together. Do you think these neural networks are doing something similar?

What if, when we make art, we’re starting from the static? Our stasis begins its turn into action as ideas acquiesce into matter through our bodies. The noise in our minds is focused, channeled into new grounds. Before long, the blank document, the blank canvas, the empty stage, they are all capacious with life. These are emotions expressed, creation willed, knowledge substantiated. These art objects become our pneumatic conduits as we breathe them out, and breathe them in.

Are machines allowed to participate?